Nov 29, 2011

The future of nuclear power generation is quietly taking shape, at least virtually, through the labors of several hundred scientists and technicians working on the Next Generation Nuclear Plant (NGNP) at the Idaho National Laboratory (INL) in Idaho Falls, ID. Spread across several research facilities and operating sites, these experts are wrestling with the dozens of questions that accompany any extension of nuclear power, from technology evaluation to site licensing to spent fuel.

|

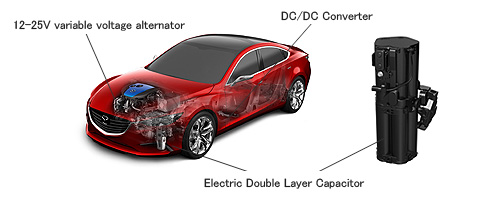

| High-temperature gas-cooled reactor. Image courtesy of Idaho National Laboratory (INL). |

NGNP is far more than an extension: it is a radical step forward for nuclear power. It will be the first truly new reactor design to enter commercial service in the U.S. in decades, and it is scheduled to be up and running by September 2021. The way forward may not be smooth. Cost estimates range from $4 billion to nearly $7 billion, and who pays for what remains unsettled. Nevertheless, barring a technical crunch, a licensing snag, or a financial meltdown, NGNP could become a cornerstone of an energy future with abundant electricity and drastically reduced carbon emissions.

The reactor initiative is for a high-temperature gas-cooled reactor, or HTGC (sometimes abbreviated as HTGR), a graphite-moderated, helium-cooled design backed by considerable engineering development in Japan, China, Russia, South Africa, and, in the U.S., by General Atomics, Inc. The primary goal of the project is to commercialize HTGCs. Experts put the potential market at several hundred reactors if most coal-fired power plants are replaced.

Researcher at Idaho National Laboratory (INL).

NGNP is run by what the U.S. Department of Energy calls the NGNP Industry Alliance. Its members include many of the biggest names in power generation: General Atomics; Areva NP; Babcock & Wilcox; Westinghouse Electric Co.; SGL Group, a German producer of graphite and carbon products; and Entergy Nuclear. Entergy owns, operates, or manages 12 of the 104 power reactors in the U.S. and is expected to handle licensing. Together, these firms' operations and expertise span the industry.

Further backing comes from the consortium that operates INL itself. Its members are Battelle Energy Alliance / Battelle Memorial Institute; Babcock & Wilcox; Washington Group International / URS Corp.; Massachusetts Institute of Technology; and the Electric Power Research Institute.

"High temperature" refers to the reactor's outlet temperature of about 1,000 °C, very roughly three times that of most of today's reactors. That means HTGCs can supply low-carbon, high-temperature process heat for petroleum refining, biofuels production, the production of fertilizer and chemical feedstocks, and the conversion of coal into other fuels, among other uses. This is why the NGNP alliance includes Dow Chemical, Eastman Chemical, ConocoPhillips, Potash Corp., and the Petroleum Technology Alliance of Canada. All are potential customers for NGNP's clean heat.

The NGNP Industry Alliance's HTGC is an integral part of the Generation IV International Forum (GIF). Founded in 2000, GIF is a broadly based international effort to put nuclear power to widespread use for base-load electricity generation and low-cost heat for industrial processes. The other five Generation IV designs are the molten-salt reactor, the sodium-cooled fast reactor, the supercritical-water-cooled reactor, the gas-cooled fast reactor, and the lead-cooled fast reactor. ("Fast" means the reactor sustains its chain reaction with high-energy neutrons that are not slowed by a moderator.)

Reactors from 2000 onward that improve on existing designs are classed as Generation III. They have:

- standardized designs to expedite licensing, reduce capital costs, and speed construction. Gen II reactors were largely custom-built.

- simpler, more rugged designs for less complicated operation and lower vulnerability to operational problems.

- higher availability, with fewer and shorter outages and operating lives of up to 60 years.

- better resistance to damage from possible core melts and aircraft impact.

- a 72-hour "grace period": after shutdown, the plant requires no active intervention for the first 72 hours, partly because of passive or inherent safety features that rely on gravity, natural convection, or resistance to high temperatures.

- higher fuel "burnup" to reduce fuel use and the amount of waste.

There is also a Gen III+ group of about a dozen reactor designs in advanced planning stages. Today's operating units, mostly built since 1970, are second generation. The first generation consisted of the prototypes and demonstration units built between 1950 and 1970.

Despite optimistic long-term prospects for NGNP and Generation IV, the nuclear industry's critics raise two objections. First, safety risks may be greater at the outset with new reactor types, because operators will have little experience with the new designs. Second, the fabrication, construction, and maintenance of new reactors can be expected to involve a steep learning curve. In the critics' view, advanced technologies carry a higher risk of accidents and mistakes than their predecessors, while established technologies grow safer with accumulated experience and lessons learned.

The NGNP program envisions dozens of these reactors by 2050. In contrast to today's power reactors, with their enormous concrete-and-steel containment structures, these reactors may be nearly invisible, because they will sit underground in concrete silos 150 feet deep.

Meanwhile, ASME is playing a major role in NGNP research on metal alloys that can withstand the reactors' extremely high outlet temperatures. The alloys under consideration are Alloy 800H (iron-nickel-chromium), Grade 91 steel (chromium-molybdenum), and Haynes International's Hastelloy XR (nickel-chromium-iron-molybdenum). The work is being carried out by ASME Standards Technology, LLC under an agreement with the U.S. Department of Energy.

Source: ASME