Engineerblogger

July 19, 2012

Barrels and cones dot an open field in Saline, Mich., forming an obstacle course for a modified vehicle. A driver remotely steers the vehicle through the course from a nearby location as a researcher looks on. Occasionally, the researcher instructs the driver to keep the wheel straight — a trajectory that appears to put the vehicle on a collision course with a barrel. Despite the driver’s actions, the vehicle steers itself around the obstacle, transitioning control back to the driver once the danger has passed.

The key to the maneuver is a new semiautonomous safety system developed by Sterling Anderson, a PhD student in MIT’s Department of Mechanical Engineering, and Karl Iagnemma, a principal research scientist in MIT’s Robotic Mobility Group.

The system uses an onboard camera and laser rangefinder to identify hazards in a vehicle’s environment. The team devised an algorithm to analyze the data and identify safe zones that avoid, for example, barrels in a field or other cars on a roadway. The system lets the driver control the vehicle and takes the wheel only when the driver is about to exit a safe zone.

Anderson, who has been testing the system in Michigan since last September, describes it as an “intelligent co-pilot” that monitors a driver’s performance and makes behind-the-scenes adjustments to keep the vehicle from colliding with obstacles, or within a safe region of the environment, such as a lane or open area.

“The real innovation is enabling the car to share [control] with you,” Anderson says. “If you want to drive, it’ll just … make sure you don’t hit anything.”

The group presented details of the safety system recently at the Intelligent Vehicles Symposium in Spain.

Off the beaten path

Robotics research has focused in recent years on developing systems — from cars to medical equipment to industrial machinery — that can be controlled by either robots or humans. For the most part, such systems operate along preprogrammed paths.

As an example, Anderson points to the technology behind self-parking cars. To parallel park, a driver engages the technology by flipping a switch and taking his hands off the wheel. The car then parks itself, following a preplanned path based on the distance between neighboring cars.

While a planned path may work well in a parking situation, Anderson says that when it comes to driving, one path, or even several, is far too limiting.

“The problem is, humans don’t think that way,” Anderson says. “When you and I drive, [we don’t] choose just one path and obsessively follow it. Typically you and I see a lane or a parking lot, and we say, ‘Here is the field of safe travel, here’s the entire region of the roadway I can use, and I’m not going to worry about remaining on a specific line, as long as I’m safely on the roadway and I avoid collisions.’”

Anderson and Iagnemma integrated this human perspective into their robotic system. The team came up with an approach to identify safe zones, or “homotopies,” rather than specific paths of travel. Instead of mapping out individual paths along a roadway, the researchers divided a vehicle’s environment into triangles, with certain triangle edges representing an obstacle or a lane’s boundary.

The researchers devised an algorithm that “constrains” obstacle-abutting edges, allowing a driver to navigate across any triangle edge except those that are constrained. If a driver is in danger of crossing a constrained edge — for instance, if he’s fallen asleep at the wheel and is about to run into a barrier or obstacle — the system takes over, steering the car back into the safe zone.
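The article describes this check only in words. As a rough illustration, the sketch below assumes the environment has already been triangulated, that each constrained (obstacle-abutting) edge is stored as a 2D line segment, and that the driver’s intent over a short horizon can be approximated by a straight segment from the current position to a predicted position. All names are hypothetical; this is not Anderson and Iagnemma’s implementation, which reasons about whole safe regions (homotopies) rather than a single predicted segment.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Edge = Tuple[Point, Point]


def orientation(a: Point, b: Point, c: Point) -> float:
    """Twice the signed area of triangle abc: >0 if c is left of a->b, <0 if right."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def segments_cross(p: Point, q: Point, a: Point, b: Point) -> bool:
    """True if segment p-q strictly crosses segment a-b (grazing contact ignored)."""
    return (orientation(a, b, p) * orientation(a, b, q) < 0
            and orientation(p, q, a) * orientation(p, q, b) < 0)


def needs_intervention(position: Point, predicted: Point,
                       constrained_edges: List[Edge]) -> bool:
    """Hypothetical trigger: would the predicted motion cross a constrained edge?"""
    return any(segments_cross(position, predicted, a, b)
               for a, b in constrained_edges)


# Hypothetical barrel edge spanning y = -1..1 at x = 5.
barrel = [((5.0, -1.0), (5.0, 1.0))]
print(needs_intervention((0.0, 0.0), (10.0, 0.0), barrel))  # wheel held straight: True
print(needs_intervention((0.0, 0.0), (10.0, 3.0), barrel))  # veering past the barrel: False
```

Holding the wheel straight toward the barrel edge trips the check, while a path that clears the edge does not, mirroring the obstacle-course test described at the top of the post.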

Building trust

So far, the team has run more than 1,200 trials of the system, with few collisions; most of these occurred when glitches in the vehicle’s camera failed to identify an obstacle. For the most part, the system has successfully helped drivers avoid collisions.

Benjamin Saltsman, manager of intelligent truck vehicle technology and innovation at Eaton Corp., says the system has several advantages over fully autonomous variants such as the self-driving cars developed by Google and Ford. Such systems, he says, are loaded with expensive sensors, and require vast amounts of computation to plan out safe routes.

"The implications of [Anderson's] system is it makes it lighter in terms of sensors and computational requirements than what a fully autonomous vehicle would require," says Saltsman, who was not involved in the research. "This simplification makes it a lot less costly, and closer in terms of potential implementation."

In experiments, Anderson has also observed an interesting human response: Those who trust the system tend to perform better than those who don’t. For instance, when asked to hold the wheel straight, even in the face of a possible collision, drivers who trusted the system drove through the course more quickly and confidently than those who were wary of the system.

And what would the system feel like for someone who is unaware that it’s activated? “You would likely just think you’re a talented driver,” Anderson says. “You’d say, ‘Hey, I pulled this off,’ and you wouldn’t know that the car is changing things behind the scenes to make sure the vehicle remains safe, even if your inputs are not.”

He acknowledges that this isn’t necessarily a good thing, particularly for people just learning to drive; beginners may end up thinking they are better drivers than they actually are. Without negative feedback, these drivers can actually become less skilled and more dependent on assistance over time. On the other hand, Anderson says expert drivers may feel hemmed in by the safety system. He and Iagnemma are now exploring ways to tailor the system to various levels of driving experience.

The team is also hoping to pare down the system to identify obstacles using a single cellphone. “You could stick your cellphone on the dashboard, and it would use the camera, accelerometers and gyro to provide the feedback needed by the system,” Anderson says. “I think we’ll find better ways of doing it that will be simpler, cheaper and allow more users access to the technology.”

This research was supported by the United States Army Research Office and the Defense Advanced Research Projects Agency. The experimental platform was developed in collaboration with Quantum Signal LLC with assistance from James Walker, Steven Peters and Sisir Karumanchi.

Source: MIT News

Thursday 19 July 2012

Autonomous robot scans ship hulls for mines

MIT News

July 17, 2012


Algorithms developed by MIT researchers enable an autonomous underwater vehicle (AUV) to swim around and reconstruct a ship's propeller.

Image: Franz Hover, Brendan Englot

For years, the U.S. Navy has employed human divers, equipped with sonar cameras, to search for underwater mines attached to ship hulls. The Navy has also trained dolphins and sea lions to search for bombs on and around vessels. While animals can cover a large area in a short amount of time, they are costly to train and care for, and don’t always perform as expected.

In the last few years, Navy scientists, along with research institutions around the world, have been engineering resilient robots for minesweeping and other risky underwater missions. The ultimate goal is to design completely autonomous robots that can navigate and map cloudy underwater environments — without any prior knowledge of those environments — and detect mines as small as an iPod.

Now Franz Hover, the Finmeccanica Career Development Associate Professor in the Department of Mechanical Engineering, and graduate student Brendan Englot have designed algorithms that vastly improve such robots’ navigation and feature-detecting capabilities. Using the group’s algorithms, the robot is able to swim around a ship’s hull and view complex structures such as propellers and shafts. The goal is to achieve a resolution fine enough to detect a 10-centimeter mine attached to the side of a ship.

“A mine this small may not sink the vessel or cause loss of life, but if it bends the shaft, or damages the bearing, you still have a big problem,” Hover says. “The ability to ensure that the bottom of the boat doesn’t have a mine attached to it is really critical to vessel security today.”

Hover and his colleagues have detailed their approach in a paper to appear in the International Journal of Robotics Research.



Labels: Defence, Defense, Education, Robotic Technology, Technology

Why platinum is the wrong material for fuel cells

Engineerblogger

July 19, 2012


Professor Alfred Anderson

Fuel cells are inefficient because the catalyst most commonly used to convert chemical energy to electricity is made of the wrong material, a researcher at Case Western Reserve University argues. Rather than continue the futile effort to tweak that material—platinum—to make it work better, Chemistry Professor Alfred Anderson urges his colleagues to start anew.

“Using platinum is like putting a resistor in the system,” he said. Anderson freely acknowledges he doesn’t know what the right material is, but he’s confident researchers’ energy would be better spent seeking it out than persisting with platinum.

“If we can find a catalyst that will do this [more efficiently],” he said, “it would reach closer to the limiting potential and get more energy out of the fuel cell.”

Anderson’s analysis and a guide for a better catalyst have been published in a recent issue of Physical Chemistry Chemical Physics and in Electrocatalysis online.

Even in the best of circumstances, Anderson explained, the chemical reaction that produces energy in a fuel cell—like those being tested by some car companies—ends up wasting a quarter of the energy that could be transformed into electricity. This point is well recognized in the scientific community, but, to date, efforts to address the problem have proved fruitless.

Anderson blames the failure on a fundamental misconception as to the reason for the energy waste. The most widely accepted theory says impurities are binding to the platinum surface of the cathode and blocking the desired reaction.

“The decades-old surface-poisoning explanation is lame because there is more to the story,” Anderson said.

To understand the loss of energy, Anderson used data derived from oxygen-reduction experiments to calculate the optimal bonding strengths between platinum and intermediate molecules formed during the oxygen-reduction reaction. The reaction takes place at the platinum-coated cathode.

He found the intermediate molecules bond too tightly or too loosely to the cathode surface, slowing the reaction and causing a drop in voltage. As a result, the fuel cell produces about 0.93 volts instead of the potential maximum of 1.23 volts.
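The 0.93 V and 1.23 V figures line up with the earlier claim that about a quarter of the available energy is wasted. A quick check, counting voltage losses only (current and fuel-utilization losses are ignored, and the function name is illustrative):

```python
def voltage_efficiency(operating_v: float, ideal_v: float = 1.23) -> float:
    """Fraction of the ideal cell voltage that is actually delivered."""
    return operating_v / ideal_v


eff = voltage_efficiency(0.93)
print(f"delivered: {eff:.1%}, lost: {1 - eff:.1%}")  # delivered: 75.6%, lost: 24.4%
```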

To eliminate the loss, calculations show, the catalyst should have bonding strengths tailored so that all reactions taking place during oxygen reduction occur at or as near to 1.23 volts as possible.
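Anderson’s prescription, that every step should run at or near 1.23 V, can be illustrated with the limiting-potential bookkeeping commonly used in electrocatalysis. This is a swapped-in textbook framework, not necessarily the model in Anderson’s papers, and the step potentials below are invented for illustration. The four-electron reduction of oxygen is split into four proton-electron transfer steps; their reversible potentials must sum to 4 × 1.23 V = 4.92 V, and the whole reaction can only proceed at the lowest of them.

```python
from typing import Sequence

IDEAL_V = 1.23  # reversible potential of O2 + 4H+ + 4e- -> 2H2O, in volts


def limiting_potential(step_potentials: Sequence[float]) -> float:
    """Highest electrode potential at which every step is still downhill.

    Each entry is the reversible potential (V) of one proton-electron
    transfer step; the lowest one caps the whole reaction.
    """
    return min(step_potentials)


def steps_are_consistent(step_potentials: Sequence[float], tol: float = 1e-6) -> bool:
    """A 4-electron path has four steps whose potentials sum to 4 x 1.23 V."""
    return len(step_potentials) == 4 and abs(sum(step_potentials) - 4 * IDEAL_V) < tol


unbalanced = [1.00, 1.50, 1.20, 1.22]  # invented, unevenly balanced steps
ideal = [IDEAL_V] * 4                  # every step at 1.23 V
for steps in (unbalanced, ideal):
    assert steps_are_consistent(steps)
    print(f"limiting potential: {limiting_potential(steps):.2f} V")
```

Because the four step potentials must average 1.23 V, any step above 1.23 V forces another below it, and that lower step sets the ceiling. Only a catalyst whose steps are all equal reaches the full 1.23 V, which is the balance Anderson argues platinum cannot provide.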

Anderson said the use of volcano plots, which compare catalysts by plotting their activity against how strongly they bind reaction intermediates, has actually misguided the search for the best one. “They allow you to grade a series of similar catalysts, but they don’t point to better catalysts.”

He said a catalyst made of copper laccase, an enzyme found in trees and fungi, has the desired bonding strength but lacks stability. Finding a catalyst that has both is the challenge.

Anderson is working with other researchers exploring alternative catalysts as well as an alternative reaction pathway in an effort to increase efficiency.


Source: Case Western Reserve University


Labels: Energy, Fuel Cell, Materials, Research and Development

Researchers Create Highly Conductive and Elastic Conductors Using Silver Nanowires

Engineerblogger

July 19, 2012

Researchers from North Carolina State University have developed highly conductive and elastic conductors made from silver nanoscale wires (nanowires). These elastic conductors can be used to develop stretchable electronic devices.

Stretchable circuitry would be able to do many things that its rigid counterpart cannot. For example, an electronic “skin” could help robots pick up delicate objects without breaking them, and stretchable displays and antennas could make cell phones and other electronic devices stretch and compress without affecting their performance. However, the first step toward making such applications possible is to produce conductors that are elastic and able to effectively and reliably transmit electric signals regardless of whether they are deformed.

Dr. Yong Zhu, an assistant professor of mechanical and aerospace engineering at NC State, and Feng Xu, a Ph.D. student in Zhu’s lab, have developed such elastic conductors using silver nanowires.

Silver has very high electric conductivity, meaning that it can transfer electricity efficiently. And the new technique developed at NC State embeds highly conductive silver nanowires in a polymer that can withstand significant stretching without adversely affecting the material’s conductivity. This makes it attractive as a component for use in stretchable electronic devices.

“This development is very exciting because it could be immediately applied to a broad range of applications,” Zhu said. “In addition, our work focuses on high and stable conductivity under a large degree of deformation, complementary to most other work using silver nanowires that are more concerned with flexibility and transparency.”

“The fabrication approach is very simple,” says Xu. Silver nanowires are placed on a silicon plate. A liquid polymer is poured over the silicon substrate. The polymer is then exposed to high heat, which turns the polymer from a liquid into an elastic solid. Because the polymer flows around the silver nanowires when it is in liquid form, the nanowires are trapped in the polymer when it becomes solid. The polymer can then be peeled off the silicon plate.

“Also silver nanowires can be printed to fabricate patterned stretchable conductors,” Xu says. The fact that it is easy to make patterns using the silver nanowire conductors should facilitate the technique’s use in electronics manufacturing.

When the nanowire-embedded polymer is stretched and relaxed, the surface of the polymer containing nanowires buckles. The end result is that the composite is flat on the side that contains no nanowires, but wavy on the side that contains silver nanowires.

After the nanowire-embedded surface has buckled, the material can be stretched to a tensile strain of up to 50 percent, that is, to 1.5 times its original length, without affecting the conductivity of the silver nanowires. This is because the buckled shape of the material allows the nanowires to stay in a fixed position relative to each other, even as the polymer is being stretched.
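Tensile (engineering) strain is the change in length divided by the original length, so a 50 percent strain means the conductor can be pulled to 1.5 times its starting length. A small sketch, with a hypothetical 20 mm strip as the example and illustrative function names:

```python
def engineering_strain(original_length: float, stretched_length: float) -> float:
    """Tensile (engineering) strain: change in length divided by original length."""
    return (stretched_length - original_length) / original_length


def max_stretched_length(original_length: float, max_strain: float = 0.50) -> float:
    """Longest length reachable without exceeding max_strain (default 50 percent)."""
    return original_length * (1.0 + max_strain)


# Hypothetical 20 mm conductor strip.
print(max_stretched_length(20.0))      # 30.0 (mm)
print(engineering_strain(20.0, 30.0))  # 0.5
```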

“In addition to having high conductivity and a large stable strain range, the new stretchable conductors show excellent robustness under repeated mechanical loading,” Zhu says. Other reported stretchable conductive materials are typically deposited on top of substrates and could delaminate under repeated mechanical stretching or surface rubbing.

The paper, “Highly Conductive and Stretchable Silver Nanowire Conductors,” was published in Advanced Materials. The research was supported by the National Science Foundation.

Source: North Carolina State University



Researchers develop “nanorobot” that can be programmed to target different diseases

Engineerblogger

July 19, 2012

University of Florida researchers have moved a step closer to treating diseases on a cellular level by creating a tiny particle that can be programmed to shut down the genetic production line that cranks out disease-related proteins.

In laboratory tests, these newly created “nanorobots” all but eradicated hepatitis C virus infection. The programmable nature of the particle makes it potentially useful against diseases such as cancer and other viral infections.

The research effort, led by Y. Charles Cao, a UF associate professor of chemistry, and Dr. Chen Liu, a professor of pathology and endowed chair in gastrointestinal and liver research in the UF College of Medicine, is described online this week in the Proceedings of the National Academy of Sciences.

“This is a novel technology that may have broad application because it can target essentially any gene we want,” Liu said. “This opens the door to new fields so we can test many other things. We’re excited about it.”

During the past five decades, nanoparticles — particles so small that tens of thousands of them can fit on the head of a pin — have emerged as a viable foundation for new ways to diagnose, monitor and treat disease. Nanoparticle-based technologies are already in use in medical settings, such as in genetic testing and for pinpointing genetic markers of disease. And several related therapies are at varying stages of clinical trial.

The Holy Grail of nanotherapy is an agent so exquisitely selective that it enters only diseased cells, targets only the specified disease process within those cells and leaves healthy cells unharmed.

To demonstrate how this can work, Cao and colleagues, with funding from the National Institutes of Health, the Office of Naval Research and the UF Research Opportunity Seed Fund, created and tested a particle that targets hepatitis C virus in the liver and prevents the virus from making copies of itself.

Hepatitis C infection causes liver inflammation, which can eventually lead to scarring and cirrhosis. The disease is transmitted via contact with infected blood, most commonly through injection drug use, needlestick injuries in medical settings, and birth to an infected mother. More than 3 million people in the United States are infected and about 17,000 new cases are diagnosed each year, according to the Centers for Disease Control and Prevention. Patients can go many years without symptoms, which can include nausea, fatigue and abdominal discomfort.

Current hepatitis C treatments involve the use of drugs that attack the replication machinery of the virus. But the therapies are only partially effective, on average helping fewer than 50 percent of patients, according to studies published in The New England Journal of Medicine and other journals. Side effects vary widely from one medication to another, and can include flu-like symptoms, anemia and anxiety.

Cao and colleagues, including graduate student Soon Hye Yang and postdoctoral associates Zhongliang Wang, Hongyan Liu and Tie Wang, wanted to improve on the concept of interfering with the viral genetic material in a way that boosted therapy effectiveness and reduced side effects.

The particle they created can be tailored to match the genetic material of the desired target of attack, and to sneak into cells unnoticed by the body’s innate defense mechanisms.

Recognition of genetic material from potentially harmful sources is the basis of important treatments for a number of diseases, including cancer, that are linked to the production of detrimental proteins. It also has potential for use in detecting and destroying viruses used as bioweapons.

The new virus-destroyer, called a nanozyme, has a backbone of tiny gold particles and a surface with two main biological components. The first biological portion is a type of protein called an enzyme that can destroy the genetic recipe-carrier, called mRNA, for making the disease-related protein in question. The other component is a large molecule called a DNA oligonucleotide that recognizes the genetic material of the target to be destroyed and instructs its neighbor, the enzyme, to carry out the deed. By itself, the enzyme does not selectively attack hepatitis C, but the combo does the trick.
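The recognition step relies on base pairing: the DNA oligonucleotide binds the stretch of mRNA whose sequence is complementary to it, read in the opposite direction. The sketch below finds such sites in a toy sequence. The sequences and function names are hypothetical, not the hepatitis C target used by the UF team, and real probe design also has to weigh factors such as binding stability, RNA folding and off-target matches.

```python
from typing import Dict, List

# Watson-Crick pairing from a DNA base to the RNA base it binds.
DNA_TO_RNA_PAIR: Dict[str, str] = {"A": "U", "T": "A", "G": "C", "C": "G"}


def rna_target_site(dna_probe: str) -> str:
    """Return the mRNA site (5'->3') that a DNA probe (5'->3') base-pairs with.

    Strands pair antiparallel, so the site is the reverse complement of the
    probe, written with uracil (U) opposite each adenine in the probe.
    """
    return "".join(DNA_TO_RNA_PAIR[base] for base in reversed(dna_probe.upper()))


def find_binding_sites(mrna: str, dna_probe: str) -> List[int]:
    """Return every 0-based start index where the probe's target site occurs."""
    site = rna_target_site(dna_probe)
    mrna = mrna.upper()
    return [i for i in range(len(mrna) - len(site) + 1)
            if mrna[i:i + len(site)] == site]


# Hypothetical toy transcript, not a real viral sequence.
print(find_binding_sites("GGACGUUUACGU", "ACGT"))  # [2, 8]
```

Here the probe ACGT pairs with the mRNA site ACGU, which occurs twice in the toy transcript.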

“They completely change their properties,” Cao said.

In laboratory tests, the treatment led to almost a 100 percent decrease in hepatitis C virus levels. In addition, it did not trigger the body’s defense mechanism, and that reduced the chance of side effects. Still, additional testing is needed to determine the safety of the approach.

Future therapies could potentially be in pill form.

“We can effectively stop hepatitis C infection if this technology can be further developed for clinical use,” said Liu, who is a member of The UF Shands Cancer Center.

The UF nanoparticle design takes inspiration from the Nobel prize-winning discovery of a process in the body in which one part of a two-component complex destroys the genetic instructions for manufacturing protein, and the other part serves to hold off the body’s immune system attacks. This complex controls many naturally occurring processes in the body, so drugs that imitate it have the potential to hijack the production of proteins needed for normal function. The UF-developed therapy tricks the body into accepting it as part of the normal processes, but does not interfere with those processes.

“They’ve developed a nanoparticle that mimics a complex biological machine — that’s quite a powerful thing,” said nanoparticle expert Dr. C. Shad Thaxton, an assistant professor of urology at the Feinberg School of Medicine at Northwestern University and co-founder of the biotechnology company AuraSense LLC, who was not involved in the UF study. “The promise of nanotechnology is extraordinary. It will have a real and significant impact on how we practice medicine.”

Source: University of Florida


Labels: Education, Medical, Nanotechnology, Robotic Technology

The Artificial Finger: New ultracapacitor delivers a jolt of energy at a constant voltage

Engineerblogger

June 19, 2012


To touch something is to understand it, emotionally and cognitively. Touch is one of our most important senses, one we use and need in our daily lives. But accidents or illnesses can rob us of our sense of touch.

Now European researchers in the NanoBioTact and NanoBioTouch projects have delved deep into the mysteries of touch and developed the first sensitive artificial finger.

The main scientific aims of the projects are to radically improve understanding of the human mechano-transduction system and of tissue-engineered nanobiosensors. To that end, an international, multidisciplinary team of 13 scientific institutes, universities and companies pooled its knowledge. “There are many potential applications of biometric tactile sensoring, for example in prosthetic limbs where you’ve got neuro-coupling which allows the limb to sense objects and also to feed back to the brain, to control the limb. Another area would be in robotics where you might want the capability to have sense the grip of objects, or intelligent haptic exploration of surfaces for example,” says Prof. Michael Adams, the coordinator of NanoBioTact.

The scientists have already developed a prototype of the first sensitive artificial finger. It works with an array of pressure sensors that mimic the spatial resolution, sensitivity and dynamics of human neural tactile sensors and can be directly connected to the central nervous system. Combined with an artificial skin that mimics a human fingerprint, the device’s sensitivity to vibrations improves. When the biomimetic finger slides across a textured surface, it vibrates in different ways depending on the texture, producing different signals; once the device is used by patients, they could recognise whether a surface is smooth or scratchy. “The sensors are working very much like the sensors are doing on your own finger,” says physicist Dr. Michael Ward from the School of Mechanical Engineering at the University of Birmingham.
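Why different textures produce different signals can be seen from a simple kinematic relation: sliding at speed v over ridges spaced λ apart excites vibrations at roughly f = v / λ. This is a standard first approximation, not the projects’ actual signal processing, and the function name, speeds and spacings below are illustrative.

```python
def texture_vibration_hz(sliding_speed_mm_s: float, ridge_spacing_mm: float) -> float:
    """Approximate fundamental vibration frequency when sliding over a periodic texture.

    Each ridge passed excites roughly one oscillation, so f = v / spacing.
    """
    if ridge_spacing_mm <= 0:
        raise ValueError("ridge spacing must be positive")
    return sliding_speed_mm_s / ridge_spacing_mm


# Same hypothetical sliding speed over two illustrative textures.
print(texture_vibration_hz(20.0, 0.5))  # fine texture: 40.0 Hz
print(texture_vibration_hz(20.0, 2.0))  # coarse texture: 10.0 Hz
```

At the same sliding speed, a finely textured surface vibrates the finger at four times the frequency of a coarse one, which is the kind of difference the sensor array can pick up.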

Putting the biomimetic finger on artificial limbs would take prostheses to the next level. “Compared to the hand prostheses which are currently on the market, an integrated sense of touch would be a major improvement. It would be a truly modern and biometric device which would give the patient the feeling as if it belonged to his own body,” says Dr. Lucia Beccai from the Centre for Micro-Robotics at the Italian Institute for Technology. But many tests remain before the artificial finger becomes available on a large scale. Nevertheless, by combining computer and cognitive sciences with nanotechnology and biotechnology, the NanoBioTact and NanoBioTouch projects have already brought artificial limbs with sensitive fingers a big step closer.

Source: Youris


Tuesday 10 July 2012

Keeping electric vehicle batteries cool

Engineerblogger

July 10, 2012

Heat can damage the batteries of electric vehicles: even driving fast on the freeway in summer temperatures can overheat the battery. An innovative new coolant can absorb three times as much heat as water, keeping the battery temperature within an acceptable range even in extreme driving situations.

Batteries provide the “fuel” that drives electric cars; in effect, they are the vehicles’ lifeblood. If batteries are to have a long service life, overheating must be avoided. A battery’s “comfort zone” lies between 20°C and 35°C, but even a Sunday drive in the midday heat of summer can push its temperature well beyond that range. The damage can be serious: operating a battery at 45°C instead of 35°C halves its service life. And batteries are expensive; a new one can cost as much as half the price of the entire vehicle. That is why it is so important to keep them cool.

Thus far, conventional cooling systems have not reached their full potential. Either the batteries are not cooled at all, as is the case with batteries that are simply exchanged for a fully charged one at the “service station”, or they are air-cooled. But air can absorb very little heat and is also a poor conductor of it. What’s more, air cooling requires large gaps between the battery’s cells so that enough fresh air can circulate between them. Water-cooling systems are still in their infancy. Although they have a higher thermal capacity than air-cooling systems and are better at conducting away heat, their downside is the limited supply of water in the system, compared with the essentially limitless amount of air that can flow through a battery.
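The article gives a single data point: running a battery at 45°C instead of 35°C halves its service life. A common rule of thumb extends such a figure into an exponential law, with life halving for every further 10°C. The sketch below applies that assumed rule, which is an extrapolation and not a claim from the source, together with the 20°C to 35°C comfort zone. It does not model the separate problems of cold operation; below 35°C it simply returns the reference life. The function names are hypothetical.

```python
COMFORT_ZONE_C = (20.0, 35.0)  # battery "comfort zone" quoted in the article

def in_comfort_zone(temp_c: float) -> bool:
    """True if the temperature lies inside the quoted comfort zone."""
    low, high = COMFORT_ZONE_C
    return low <= temp_c <= high

def relative_service_life(temp_c: float, ref_c: float = 35.0,
                          halving_step_c: float = 10.0) -> float:
    """Service life relative to operation at ref_c.

    Assumption: life halves for every halving_step_c degrees above ref_c
    (rule-of-thumb extrapolation of the single 45 °C vs 35 °C data point).
    Temperatures at or below ref_c return 1.0; cold effects are not modelled.
    """
    if temp_c <= ref_c:
        return 1.0
    return 0.5 ** ((temp_c - ref_c) / halving_step_c)

for t in (30.0, 35.0, 40.0, 45.0):
    print(f"{t:.0f} °C: in comfort zone={in_comfort_zone(t)}, "
          f"relative life={relative_service_life(t):.2f}")
```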

More space under the hood

In the future, another option will be available for keeping batteries cool: a coolant called CryoSolplus. It is a dispersion of paraffin in water, with stabilizing tensides (surfactants) and a dash of the antifreeze agent glycol. Its advantage is that CryoSolplus can absorb three times as much heat as water and works better as a buffer in extreme situations, such as trips on the freeway at the height of summer. This means the holding tank for the coolant can be much smaller than those of water-cooling systems, saving both weight and space under the hood. In addition, CryoSolplus conducts heat well, moving it very quickly from the battery cells into the coolant. With additional costs of just 50 to 100 euros, the new cooling system is only marginally more expensive than water cooling. The coolant was developed by researchers at the Fraunhofer Institute for Environmental, Safety and Energy Technology UMSICHT in Oberhausen.

As CryoSolplus absorbs heat, the solid paraffin droplets within it melt, storing the heat in the process. When the dispersion cools, the droplets revert to their solid form. Scientists call such substances phase change materials, or PCMs. “The main problem we had to overcome during development was to make the dispersion stable,” explains Dipl.-Ing. Tobias Kappels, a scientist at UMSICHT. The individual solid droplets of paraffin had to be prevented from agglomerating or, since they are lighter than water, from collecting on the surface of the dispersion; they need to be evenly distributed throughout the water. Tensides stabilize the dispersion by depositing themselves on the paraffin droplets and forming a kind of protective coating. “To find out which tensides are best suited to this purpose, we examined the dispersion in three different stress situations: How long can it be stored without deteriorating? How well does it withstand mechanical stresses such as being pumped through pipes? And how stable is it when exposed to thermal stresses, for instance when the paraffin particles freeze and then thaw again?” says Kappels. Other properties of the dispersion that the researchers are optimizing include its heat capacity, its ability to transfer heat and how easily it flows. The scientists’ next task will be to carry out field tests, trying out the coolant in an experimental vehicle.
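To see why melting droplets help, here is a rough energy balance per kilogram of coolant: the sensible heat of both components plus, if the temperature window spans the paraffin's melting point, the latent heat of fusion of the paraffin. This is a sketch under stated assumptions, not UMSICHT's formulation. The paraffin specific heat (2.0 kJ/(kg·K)), latent heat (200 kJ/kg), mass fraction, melting point and temperature window are illustrative values; glycol and tensides are ignored; one specific heat is used for both paraffin phases; and all paraffin is assumed to melt at a single temperature.

```python
C_WATER = 4.18      # kJ/(kg·K), specific heat of liquid water
C_PARAFFIN = 2.0    # kJ/(kg·K), assumed typical value, used for both solid and liquid paraffin
L_PARAFFIN = 200.0  # kJ/kg, assumed latent heat of fusion of paraffin

def heat_absorbed_kj(t_start_c: float, t_end_c: float,
                     paraffin_fraction: float, melt_point_c: float) -> float:
    """Heat absorbed by 1 kg of water/paraffin dispersion warming from t_start_c to t_end_c.

    paraffin_fraction is the paraffin mass fraction (0.0 means plain water).
    """
    dt = t_end_c - t_start_c
    c_mix = (1.0 - paraffin_fraction) * C_WATER + paraffin_fraction * C_PARAFFIN
    sensible = c_mix * dt
    # Latent heat is absorbed only if the droplets actually melt inside the window.
    melts = t_start_c < melt_point_c <= t_end_c
    latent = paraffin_fraction * L_PARAFFIN if melts else 0.0
    return sensible + latent

water = heat_absorbed_kj(30.0, 35.0, paraffin_fraction=0.0, melt_point_c=33.0)
pcm = heat_absorbed_kj(30.0, 35.0, paraffin_fraction=0.3, melt_point_c=33.0)
print(f"water: {water:.1f} kJ/kg, dispersion: {pcm:.1f} kJ/kg, ratio: {pcm / water:.1f}")
```

With these assumed values, the dispersion stores several times more heat than water over a narrow window that includes the melting point, but slightly less than water over a window that does not, because paraffin's specific heat is lower than water's. That suggests the paraffin's melting point needs to lie within the battery's operating range. Since the required coolant volume scales roughly with the heat to be buffered per kilogram, more heat per kilogram points towards a smaller tank (ignoring density differences).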

Source: Fraunhofer-Gesellschaft


Graphene Repairs Holes By Knitting Itself Back Together, Say Physicists

Engineerblogger

July 10, 2012

The graphene revolution is upon us. If the visionaries are to be believed, the next generation of more or less everything is going to be based on this wonder material: sensors, actuators, transistors, information processors and so on. There seems little that graphene can't do.

But there's one fly in the ointment. Nobody has yet worked out how to make graphene in large, reliable quantities or how to carve and grow it into the shapes necessary for the next generation of devices.

That's largely because it's tricky growing anything into a layer only a single atom thick. But for carbon, it's all the more difficult because of this element's affinity for other atoms, including itself. A carbon sheet will happily curl up and form a tube or a ball or some more exotic shape. It will also react with other atoms nearby, which prevents growth and can even tear graphene apart.

So a better understanding of the way a graphene sheet interacts with itself and its environment is crucial if physicists are ever going to tame this stuff.

Enter Konstantin Novoselov at the University of Manchester and a few pals who have spent more than a few hours staring at graphene sheets through an electron microscope to see how the material behaves.

Today, these guys say they've discovered why graphene appears so unpredictable. It turns out that if you make a hole in graphene, the material automatically knits itself back together.

Novoselov and co made their discovery by etching tiny holes into a graphene sheet using an electron beam and watching what happens next using an electron microscope. They also added a few atoms of palladium or nickel, which catalyse the dissociation of carbon bonds and bind to the edges of the holes, making them stable.

They found that the size of the holes depended on the number of metal atoms they added: more metal atoms can stabilise bigger holes.

But here's the curious thing. If they also added extra carbon atoms to the mix, these displaced the metal atoms and knitted the holes back together.

Novoselov and co say the structure of the repaired area depends on the form in which the carbon is available. So when available as a hydrocarbon, the repairs tend to contain non-hexagonal defects where foreign atoms have entered the structure.

But when the carbon is available in pure form, the repairs are perfect and form pristine graphene.

That's important because it immediately suggests a way to grow graphene into almost any shape using the careful injection of metal and carbon atoms.

But there are significant challenges ahead. One important question is how quickly these processes occur and whether they can be controlled with the precision and reliability necessary for device manufacture.

Novoselov is a world leader in this area and the joint recipient of the Nobel Prize for physics in 2010 for his early work on graphene. He and his team are well set up to solve this and various related questions.

But with the future of computing (and almost everything else) at stake, there are bound to be plenty of competitors snapping at their heels.

Source: Technology Review

Additional Information:

- Ref: arxiv.org/abs/1207.1487: Graphene Re-Knits Its Holes



Smart Headlight System Will Have Drivers Seeing Through the Rain: Shining Light Between Drops Makes Thunderstorm Seem Like a Drizzle

Engineerblogger

July 10, 2012

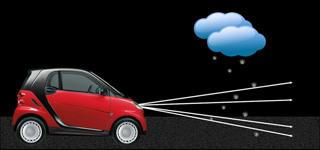

Drivers can struggle to see when driving at night in a rainstorm or snowstorm, but a smart headlight system invented by researchers at Carnegie Mellon University's Robotics Institute can improve visibility by constantly redirecting light to shine between particles of precipitation.

The system, demonstrated in laboratory tests, prevents the distracting and sometimes dangerous glare that occurs when headlight beams are reflected by precipitation back toward the driver.

"If you're driving in a thunderstorm, the smart headlights will make it seem like it's a drizzle," said Srinivasa Narasimhan, associate professor of robotics.

The system uses a camera to track the motion of raindrops and snowflakes and then applies a computer algorithm to predict where those particles will be just a few milliseconds later. The light projection system then adjusts to deactivate light beams that would otherwise illuminate the particles in their predicted positions.
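The predict-then-blank loop described above can be sketched in a few lines. This is a minimal illustration, not the Carnegie Mellon team's algorithm. It assumes that each tracked particle already has a position and velocity in projector pixel coordinates (that is, camera and projector are calibrated to each other), that particles move at constant velocity over the latency window, and that every projector pixel can be switched individually. `Particle`, `predict`, `beam_mask` and `light_loss` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass
class Particle:
    x: float   # horizontal position, projector pixels
    y: float   # vertical position, projector pixels (increasing downwards)
    vx: float  # estimated velocity from camera frames, pixels per millisecond
    vy: float

def predict(p: Particle, latency_ms: float) -> tuple[float, float]:
    """Constant-velocity extrapolation to where the particle will be when the light arrives."""
    return p.x + p.vx * latency_ms, p.y + p.vy * latency_ms

def beam_mask(particles: list[Particle], width: int, height: int,
              latency_ms: float, margin: int = 1) -> list[list[bool]]:
    """True = pixel lit, False = pixel switched off to avoid lighting a particle."""
    mask = [[True] * width for _ in range(height)]
    for p in particles:
        px, py = predict(p, latency_ms)
        cx, cy = round(px), round(py)
        # Switch off a small square around the predicted position to absorb prediction error.
        for y in range(cy - margin, cy + margin + 1):
            for x in range(cx - margin, cx + margin + 1):
                if 0 <= x < width and 0 <= y < height:
                    mask[y][x] = False
    return mask

def light_loss(mask: list[list[bool]]) -> float:
    """Fraction of the beam that is switched off."""
    total = sum(len(row) for row in mask)
    off = sum(row.count(False) for row in mask)
    return off / total

drops = [Particle(10.0, 0.0, 0.0, 0.4), Particle(20.0, 3.0, 0.1, 0.6)]
mask = beam_mask(drops, width=32, height=24, latency_ms=13.0)
print(f"fraction of light switched off: {light_loss(mask):.3f}")
```

The `margin` parameter illustrates the basic trade-off: a larger blanked area tolerates worse predictions but costs more of the headlight's output.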

"A human eye will not be able to see that flicker of the headlights," Narasimhan said. "And because the precipitation particles aren't being illuminated, the driver won't see the rain or snow either."

To people, rain can appear as elongated streaks that seem to fill the air. To high-speed cameras, however, rain consists of sparsely spaced, discrete drops. That leaves plenty of space between the drops where light can be effectively distributed if the system can respond rapidly, Narasimhan said.

In their lab tests, Narasimhan and his research team demonstrated that their system could detect raindrops, predict their movement and adjust a light projector accordingly in 13 milliseconds. At low speeds, such a system could eliminate 70 to 80 percent of visible rain during a heavy storm, while losing only 5 or 6 percent of the light from the headlamp.

To operate at highway speeds and to work effectively in snow and hail, the system's response time will need to be cut to just a few milliseconds, Narasimhan said. The lab tests have demonstrated the feasibility of the system, however, and the researchers are confident that the speed of the system can be boosted.
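A back-of-the-envelope calculation shows why latency matters more at speed. The figures here are assumptions, not from the article: large raindrops are taken to fall at roughly 9 m/s, and the car's forward motion is simply combined with the fall speed into a relative speed. How much of this motion actually shifts a drop's position in the projector image depends on the headlight geometry, so treat the numbers as a rough illustration. The function names are hypothetical.

```python
import math

def displacement_mm(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance moved during the latency window; 1 m/s sustained for 1 ms is exactly 1 mm."""
    return speed_m_per_s * latency_ms

def relative_speed_m_per_s(fall_speed_m_per_s: float, vehicle_kmh: float) -> float:
    """Magnitude of a drop's velocity relative to the car: vertical fall plus forward motion."""
    return math.hypot(fall_speed_m_per_s, vehicle_kmh / 3.6)

FALL_SPEED = 9.0  # m/s, assumed: roughly the terminal velocity of a large raindrop

for kmh in (0, 30, 100):
    v = relative_speed_m_per_s(FALL_SPEED, kmh)
    for latency_ms in (13.0, 3.0):
        print(f"{kmh:>3} km/h, {latency_ms:>4.0f} ms: drop moves ~{displacement_mm(v, latency_ms):.0f} mm")
```

Because displacement is linear in latency, cutting the response from 13 ms to 3 ms shrinks the distance a drop travels during each prediction by a factor of about four, at any vehicle speed.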

The test apparatus, for instance, couples a camera with an off-the-shelf DLP projector. Road-worthy systems likely would be based on arrays of light-emitting diode (LED) light sources in which individual elements could be turned on or off, depending on the location of raindrops. New LED technology could make it possible to combine LED light sources with image sensors on a single chip, enabling high-speed operation at low cost.

Narasimhan's team is now engineering a more compact version of the smart headlight that in coming years could be installed in a car for road testing.

Though a smart headlight system will never be able to eliminate all precipitation from the driver's field of view, simply reducing the amount of reflection and distortion caused by precipitation can substantially improve visibility and reduce driver distraction. Another benefit is that the system also can detect oncoming cars and direct the headlight beams away from the eyes of those drivers, eliminating the need to shift from high to low beams.

"One good thing is that the system will not fail in a catastrophic way," Narasimhan said. "If it fails, it is just a normal headlight."

This research was sponsored by the Office of Naval Research, the National Science Foundation, the Samsung Advanced Institute of Technology and Intel Corp. Collaborators include Takeo Kanade, professor of computer science and robotics; Anthony Rowe, assistant research professor of electrical and computer engineering; Robert Tamburo, Robotics Institute project scientist; Peter Barnum, a former robotics Ph.D. student now with Texas Instruments; and Raoul de Charette, a visiting Ph.D. student from Mines ParisTech, France.

Source: Carnegie Mellon University


Nanodevice builds electricity from tiny pieces

Engineerblogger

July 10, 2012

A team of scientists at the National Physical Laboratory (NPL) and the University of Cambridge has made a significant advance in using nano-devices to create accurate electrical currents. An electrical current is a flow of charge carried by vast numbers of tiny particles called electrons. The team has developed an electron pump, a nano-device that picks up electrons one at a time and moves them across a barrier, creating a very well-defined electrical current.

The device drives an electrical current by manipulating individual electrons, one by one, at very high speed. This technique could provide a new definition of the ampere, the unit of electrical current, which is traditionally defined in terms of the mechanical forces between current-carrying wires.

The key breakthrough came when scientists experimented with the exact shape of the voltage pulses that control the trapping and ejection of electrons. By changing the voltage slowly while trapping electrons, and then much more rapidly when ejecting them, it was possible to massively speed up the overall rate of pumping without compromising the accuracy.

By employing this technique, the team were able to pump almost a billion electrons per second, 300 times faster than the previous record for an accurate electron pump set at the National Institute of Standards and Technology (NIST) in the USA in 1996.

Although the resulting current of 150 picoamperes is small (tens of billions of times smaller than the current drawn by a kettle), the team were able to measure it with an accuracy of one part per million, confirming that the electron pump was accurate at this level. This result is a milestone in the precise, fast manipulation of single electrons and an important step towards a redefinition of the ampere.

As reported in Nature Communications, the team used a nano-scale semiconductor device called a 'quantum dot' to pump electrons through a circuit. The quantum dot is a tiny electrostatic trap less than 0.0001 mm wide. The shape of the quantum dot is controlled by voltages applied to nearby electrodes.

The dot can be filled with electrons and then raised in energy. Through a process known as 'back-tunneling', all but one of the electrons fall out of the quantum dot back into the source lead. Ideally, just one electron remains trapped in the dot, and it is then ejected into the output lead by tilting the trap. Repeating this cycle rapidly gives a current determined solely by the repetition rate and the charge on each electron, a universal constant of nature that is the same for all electrons.
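The relation described above, I = e·f for one electron per cycle, can be checked against the article's numbers. This is a minimal sketch; the function names are hypothetical, and the elementary charge is the exact value fixed in the SI since 2019.

```python
E_CHARGE_C = 1.602176634e-19  # elementary charge in coulombs (exact in the SI since 2019)

def pump_current_a(frequency_hz: float, electrons_per_cycle: int = 1) -> float:
    """Ideal quantised current I = n * e * f for a pump moving n electrons per cycle."""
    return electrons_per_cycle * E_CHARGE_C * frequency_hz

def frequency_for_current_hz(current_a: float, electrons_per_cycle: int = 1) -> float:
    """Pumping rate needed to produce a given current."""
    return current_a / (electrons_per_cycle * E_CHARGE_C)

f = frequency_for_current_hz(150e-12)
print(f"150 pA needs about {f:.3g} electrons per second")
# One transfer error per million cycles corresponds to a relative current error of about 1e-6.
print(f"a 1e-6 relative error at 150 pA is {150e-12 * 1e-6:.3g} A")
```

With these numbers, 150 pA corresponds to about 9.4 × 10^8 electrons per second, consistent with "almost a billion electrons per second".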

The research is a significant step towards redefining the ampere, as the electron pump could improve the accuracy of primary electrical measurements.

Masaya Kataoka of the Quantum Detection Group at NPL explains:

"Our device is like a water pump in that it produces a flow by a cyclical action. The tricky part is making sure that exactly the same number of electronic charge is transported in each cycle.

The way that the electrons in our device behave is quite similar to water; if you try and scoop up a fixed volume of water, say in a cup or spoon, you have to move slowly otherwise you'll spill some. This is exactly what used to happen to our electrons if we went too fast."

Stephen Giblin, also part of the Quantum Detection Group, added:

"For the last few years, we have worked on optimising the design of our device, but we made a huge leap forward when we fine-tuned the timing sequence. We've basically smashed the record for the largest accurate single-electron current by a factor of 300.

Although moving electrons one at a time is not new, we can do it much faster, and with very high reliability - a billion electrons per second, with an accuracy of less than one error in a million operations.

Using mechanical forces to define the ampere has made a lot of sense for the last 60 or so years, but now that we have the nanotechnology to control single electrons we can move on.

The technology might seem more complicated, but actually a quantum system of measurement is more elegant, because you are basing your system on fundamental constants of nature, rather than things which we know aren't really constant, like the mass of the standard kilogram."

Source: National Physical Laboratory

Additional Information:

- Nature Communications ("Towards a quantum representation of the ampere using single electron pumps")

Labels: Education, Manufacturing, Materials, Nanotechnology, Research and Development, UK

How do you turn 10 minutes of power into 200? Efficiency, efficiency, efficiency.

Engineerblogger

July 10, 2012

DARPA seeks revolutionary advances in the efficiency of robotic actuation; fundamental research into biology, physics and electrical engineering could benefit all engineered, actuated systems

A robot that drives into an industrial disaster area and shuts off a valve leaking toxic steam might save lives. A robot that applies supervised autonomy to dexterously disarm a roadside bomb would keep humans out of harm’s way. A robot that carries hundreds of pounds of equipment over rocky or wooded terrain would increase the range warfighters can travel and the speed at which they move. But a robot that runs out of power after ten to twenty minutes of operation is limited in its utility. In fact, use of robots in defense missions is currently constrained in part by power supply issues. DARPA has created the M3 Actuation program, with the goal of achieving a 2,000 percent increase in the efficiency of power transmission and application in robots, to improve performance potential.

Humans and animals have evolved to consume energy very efficiently for movement. Bones, muscles and tendons work together for propulsion using as little energy as possible. If robotic actuation can be made to approach the efficiency of human and animal actuation, the range of practical robotic applications will greatly increase and robot design will be less limited by power plant considerations.

M3 Actuation is an effort within DARPA’s Maximum Mobility and Manipulation (M3) robotics program, and adds a new dimension to DARPA’s suite of robotics research and development work.

“By exploring multiple aspects of robot design, capabilities, control and production, we hope to converge on an adaptable core of robot technologies that can be applied across mission areas,” said Gill Pratt, DARPA program manager. “Success in the M3 Actuation effort would benefit not just robotics programs, but all engineered, actuated systems, including advanced prosthetic limbs.”

Proposals are sought in response to a Broad Agency Announcement (BAA). DARPA expects that solutions will require input from a broad array of scientific and engineering specialties to understand, develop and apply actuation mechanisms inspired in part by humans and animals. Technical areas of interest include, but are not limited to: low-loss power modulation, variable recruitment of parallel transducer elements, high-bandwidth variable impedance matching, adaptive inertial and gravitational load cancellation, and high-efficiency power transmission between joints.

Research and development will cover two tracks of work:

- Track 1 asks performer teams to develop and demonstrate high-efficiency actuation technology that will allow robots similar to the DARPA Robotics Challenge (DRC) Government Furnished Equipment (GFE) platform to have twenty times longer endurance than the DRC GFE when running on untethered battery power (currently only 10-20 minutes). Using Government Furnished Information about the GFE, M3 Actuation performers will have to build a robot that incorporates the new actuation technology. These robots will be demonstrated at, but not compete in, the second DRC live competition scheduled for December 2014.

- Track 2 will be tailored to performers who want to explore ways of improving the efficiency of actuators at scales larger or smaller than those applicable to the DRC GFE platform, and at technology readiness levels too low for incorporation into a platform during this program. Essentially, Track 2 seeks to advance the science and engineering behind actuation without requiring that it be applied at this point.
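The title's arithmetic (10 minutes of power turned into 200) follows from Track 1's twenty-fold endurance target. The Python sketch below is a back-of-the-envelope model, not DARPA's. It assumes battery energy and the mechanical work done per minute stay fixed, so endurance scales with actuation efficiency. The hypothetical `non_actuation_fraction` parameter shows why the gain shrinks when computers, sensors and other loads that better actuators don't affect take a share of the power budget.

```python
def endurance_minutes(baseline_min: float, efficiency_gain: float,
                      non_actuation_fraction: float = 0.0) -> float:
    """Estimate endurance after multiplying actuation efficiency.

    Hypothetical model: battery energy and mechanical work per minute are
    fixed, so actuator electrical power falls by 1 / efficiency_gain.
    non_actuation_fraction is the share of baseline power drawn by loads
    (computers, sensors, ...) that better actuators do not affect.
    """
    if not 0.0 <= non_actuation_fraction <= 1.0:
        raise ValueError("non_actuation_fraction must be in [0, 1]")
    # Normalise baseline electrical power to 1.0 and rescale only the actuation share.
    actuation_share = 1.0 - non_actuation_fraction
    new_power = actuation_share / efficiency_gain + non_actuation_fraction
    # Same battery energy, lower power draw: endurance grows by 1 / new_power.
    return baseline_min / new_power


for baseline in (10, 20):  # current untethered endurance range, in minutes
    print(f"{baseline} min -> {endurance_minutes(baseline, 20):.0f} min")

print(f"with 10% other loads: {endurance_minutes(10, 20, 0.1):.0f} min")
```

Under these assumptions, the 10-20 minute baseline becomes 200-400 minutes when actuation is the only load. A 10% non-actuation load cuts the 10-minute case to roughly 69 minutes.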

Although the two are separate efforts, M3 Actuation will run in parallel with the DRC. In both programs DARPA seeks to develop the enabling technologies required for expanded practical use of robots in defense missions. Thus, performers on M3 Actuation will share their design approaches at the first DRC live competition scheduled for December 2013, and demonstrate their final systems at the second DRC live competition scheduled for December 2014.

Source: DARPA

Hypersonic - The new stealth

Engineerblogger

July 10, 2012

DARPA’s research and development in stealth technology during the 1970s and 1980s led to the world’s most advanced radar-evading aircraft, giving the United States a strategic national security advantage. Today, that advantage is threatened as other nations improve their stealth and counter-stealth capabilities. Restoring that battle space advantage requires advanced speed, reach and range. Hypersonic technologies have the potential to provide the dominance once afforded by stealth, supporting a wide range of future national security missions.

Extreme hypersonic flight at Mach 20 (20 times the speed of sound), which would enable DoD to reach anywhere in the world in under an hour, is an area of research where significant scientific advances have eluded researchers for decades. Thanks to recent programs by DARPA, the Army and the Air Force, however, more has been learned about this challenging subject.
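The "anywhere in the world in under an hour" claim can be sanity-checked with a short Python calculation. This is a sketch under simplifying assumptions. It assumes constant Mach 20 along a great circle covering half of Earth's circumference, the farthest any two points on the surface can be. It ignores time spent accelerating or decelerating. It also uses an ideal-gas speed of sound evaluated at two representative air temperatures.

```python
import math

GAMMA = 1.4                   # ratio of specific heats for air
R_AIR = 287.05                # specific gas constant for dry air, J/(kg*K)
EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, metres


def speed_of_sound(temp_k: float) -> float:
    """Ideal-gas speed of sound a = sqrt(gamma * R * T), in m/s."""
    return math.sqrt(GAMMA * R_AIR * temp_k)


def minutes_to_antipode(mach: float, temp_k: float) -> float:
    """Minutes to cover half of Earth's circumference at a constant Mach number."""
    distance_m = math.pi * EARTH_RADIUS_M  # longest possible great-circle trip
    speed_ms = mach * speed_of_sound(temp_k)
    return distance_m / speed_ms / 60.0


# Sea-level standard air (288.15 K) vs. the colder lower stratosphere (216.65 K).
for label, temp in [("sea level", 288.15), ("stratosphere", 216.65)]:
    print(f"{label}: {minutes_to_antipode(20, temp):.0f} min")
```

Under these assumptions the trip takes roughly 49 minutes using sea-level air. Using the colder stratospheric value, it takes about 57 minutes. Both are inside the one-hour figure.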

“DoD’s hypersonic technology efforts have made significant advancements in our technical understanding of several critical areas, including aerodynamics; aerothermal effects; and guidance, navigation and control,” said Acting DARPA Director Kaigham J. Gabriel, “but additional unknowns exist.”

Tackling remaining unknowns for DoD hypersonics efforts is the focus of the new DARPA Integrated Hypersonics (IH) program. “History is rife with examples of different designs for ‘flying vehicles’ and approaches to the traditional commercial flight we all take for granted today,” explained Gabriel. “For an entirely new type of flight—extreme hypersonic—diverse solutions, approaches and perspectives informed by the knowledge gained from DoD’s previous efforts are critical to achieving our goals.”

To encourage this diversity, DARPA will host a Proposers’ Day on August 14, 2012, to detail the technical areas for which proposals are sought through an upcoming competitive broad agency announcement.

“We do not yet have a complete hypersonic system solution,” said Gregory Hulcher, director of Strategic Warfare, Office of the Under Secretary of Defense for Acquisition, Technology and Logistics. “Programs like Integrated Hypersonics will leverage previous investments in this field and continue to reduce risk, inform development, and advance capabilities.”

The IH program expands hypersonic technology research to include five primary technical areas: thermal protection system and hot structures; aerodynamics; guidance, navigation, and control (GNC); range/instrumentation; and propulsion.

At Mach 20, vehicles flying inside the atmosphere experience intense heat exceeding 3,500 degrees Fahrenheit (hotter than a blast furnace capable of melting steel), as well as extreme pressure on the aeroshell. The thermal protection materials and hot structures technology area aims to advance understanding of how high-temperature materials behave, so that they can withstand both high thermal and high structural loads. Another goal is to optimize structural designs and manufacturing processes to enable faster production of high-Mach aeroshells.
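For readers who work in metric units, the quoted heating figure converts as shown below. This is plain unit arithmetic. It says nothing about how that temperature is distributed over the vehicle.

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def celsius_to_kelvin(temp_c: float) -> float:
    """Convert degrees Celsius to kelvin."""
    return temp_c + 273.15


heat_f = 3500.0  # heating figure quoted for Mach 20 atmospheric flight
heat_c = fahrenheit_to_celsius(heat_f)
print(f"{heat_f:.0f} F = {heat_c:.0f} C = {celsius_to_kelvin(heat_c):.0f} K")
```

So 3,500 degrees Fahrenheit is roughly 1,927 degrees Celsius, or about 2,200 K.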

The aerodynamics technology area focuses on vehicle designs for different future missions. It addresses the effects of adding vertical and horizontal stabilizers or other control surfaces to improve aerodynamic control of the vehicle. It also seeks technology solutions that ensure the vehicle manages its energy well enough to glide to its destination.

Desired advances in the GNC technology area include software that enables the vehicle to make real-time, in-flight adjustments to changing conditions, such as high-altitude wind gusts, so that it stays on an optimal flight trajectory.